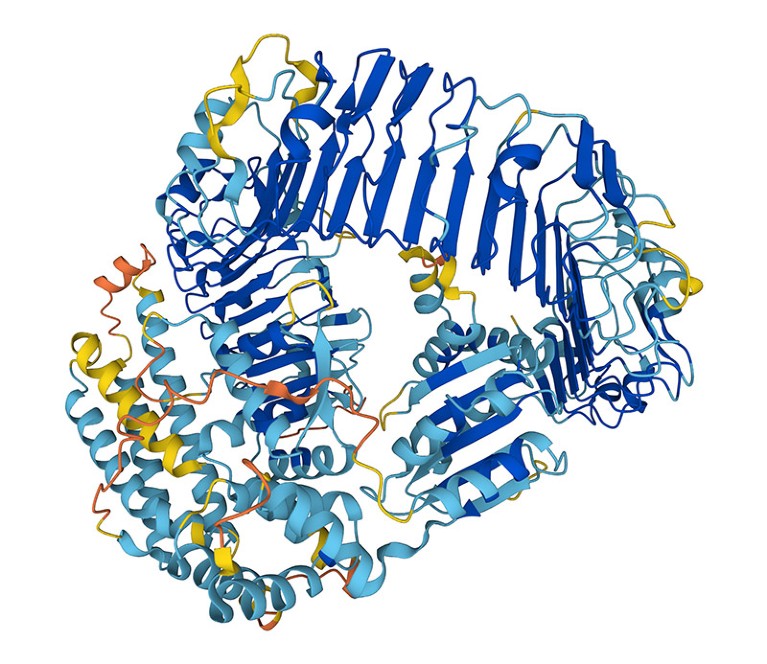

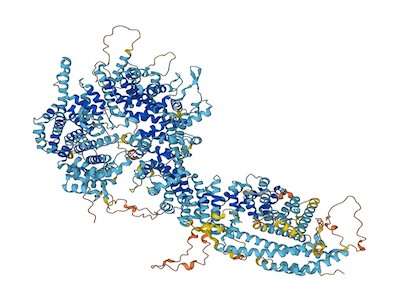

The AlphaFold AI tool can engineer proteins to perform specific functions. Credit: Google DeepMind/EMBL-EBI (CC-BY-4.0)

Could artificial intelligence (AI)-designed proteins ever be used as biological weapons? Hoping to avoid this possibility, as well as the prospect of burdensome government regulation, researchers today launched an initiative calling for the safe and ethical use of protein engineering.

“The potential benefits of protein engineering [AI] far exceed the dangers at this point,” says David Baker, a computational biophysicist at the University of Washington in Seattle, who is part of the voluntary initiative. Dozens of other scientists applying AI to biological design have signed the initiative’s list of commitments.

“It’s a good start. I’ll sign it,” says Mark Dybul, a global health policy specialist at Georgetown University in Washington, DC, who led a 2023 report on AI and biosafety for the Helena think tank in Los Angeles, California. But he also thinks that “we need government measures and regulations, and not just voluntary guidance.”

The initiative comes on the heels of reports from the US Congress, think tanks and other organizations exploring the possibility that AI tools (ranging from protein-structure-prediction networks such as AlphaFold to large language models such as the one that powers ChatGPT) could make it easier to develop biological weapons, including new toxins or highly transmissible viruses.

Dangers of designer proteins

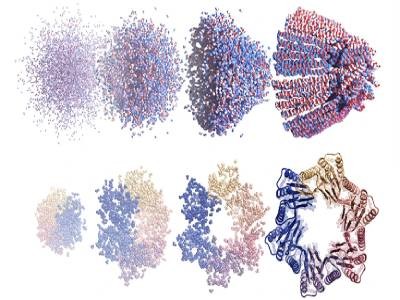

Researchers, including Baker and his colleagues, have been trying to design and produce new proteins for decades. But their ability to do so has exploded in recent years thanks to advances in AI. Efforts that previously took years or were impossible, such as designing a protein that binds to a specific molecule, can now be accomplished in minutes. Most of the artificial intelligence tools that scientists have developed to enable this are freely available.

To assess the potential for malicious use of designer proteins, Baker’s Institute for Protein Design at the University of Washington hosted an AI safety summit in October 2023. “The question was: how, if at all, should protein design be regulated? What are the dangers, if any?” says Baker.

The initiative that he and dozens of other scientists in the United States, Europe and Asia are launching today calls on the biodesign community to police itself. This includes periodically reviewing the capabilities of AI tools and monitoring research practices. Baker would like to see his field establish a committee of experts to review software before it is made widely available and to recommend “security measures” if necessary.

The initiative also calls for better screening of DNA synthesis, a key step in translating AI-designed proteins into real molecules. Currently, many companies that provide this service are registered with an industry group, the International Gene Synthesis Consortium (IGSC), which requires them to screen orders to identify harmful molecules such as toxins or pathogens.

“The best way to defend against AI threats is to have AI models that can detect those threats,” says James Diggans, head of biosecurity at Twist Bioscience, a DNA synthesis company in South San Francisco, California, and president of the IGSC.

Risk assessment

Governments are also grappling with the biosafety risks posed by AI. In October 2023, US President Joe Biden signed an executive order that called for an assessment of such risks and raised the possibility of requiring DNA-synthesis screening for federally funded research.

Baker hopes that government regulation is not in the future of this field; he says it could limit the development of drugs, vaccines and materials that AI-designed proteins could produce. Diggans adds that it’s unclear how protein design tools could be regulated, given the rapid pace of development. “It’s hard to imagine a regulation that’s appropriate one week and still appropriate the next.”

But David Relman, a microbiologist at Stanford University in California, says scientist-led efforts are not enough to ensure the safe use of AI. “Natural scientists alone cannot represent the interests of the general public.”