As usual, Google I/O 2024 is a real whirlwind of news and announcements. This year, instead of focusing on hardware, Android or Chrome, Google spent most of its developer conference convincing us that its AI features are worth prioritizing. One such effort is Project Astra, a multimodal AI assistant that you can semi-converse with and that can simultaneously use the camera to identify objects and people.

I say “semi” because it was evident after the demonstration that this part of Gemini is in its infancy. I spent a few brief minutes with Project Astra on the Pixel 8 Pro to see how it works in real time. I didn’t have enough time to test it to its full extent or try to trick it, but I did get an idea of what the future might be like for an Android user.

Ask it almost anything

The goal of Project Astra is to be an assistant that also guides you in the real world. It can answer questions about the environment around you by identifying objects, faces, moods and textiles. It can even help you remember where you last placed something.

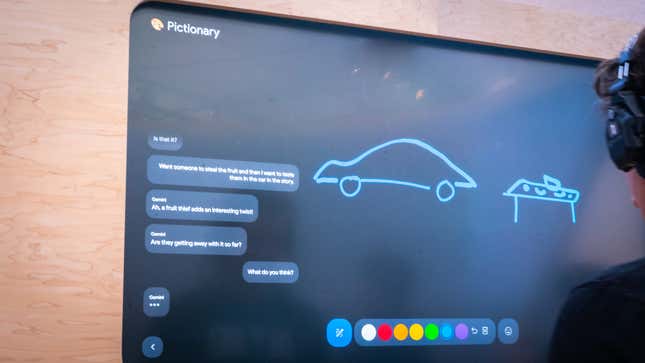

There were four different Project Astra demos to choose from. They included Storyteller mode, which asks Gemini to make up a story based on multiple inputs, and Pictionary, essentially a doodle guessing game played against the computer. There was also an alliteration mode, where the AI showed off its prowess at finding words with the same initial letter, and Free-Form, which let you chat back and forth.

The demo I got was a version of Free-Form on the Pixel 8 Pro. Another journalist in my group had requested it directly, so most of our demo focused on using the device and this assistant-like mode together.

With the Pixel 8 Pro’s camera pointed at another journalist, Gemini was able to identify that the subject was a person; we explicitly told it that the person identified as a man. It then correctly identified that he was carrying his phone. In a follow-up question, our group asked about his clothes. It gave a generalized response that he “seems to be wearing casual clothes.” We then asked what he was doing, to which Project Astra responded that it looked like he was putting on a pair of sunglasses (he was) and striking a casual pose.

I picked up the Pixel 8 Pro for a minute. I got Gemini to correctly identify a pot of artificial flowers. They were tulips. Gemini also noticed that they were colorful. From there, I wasn’t sure what else to prompt it with, and then I ran out of time. I left with more questions than I had when I entered.

With Google AI, it seems like a leap of faith. I can see how identifying a person and their actions could be an accessibility tool to help someone who is blind or has low vision navigate the world around them. But that was not what this demonstration was about. It was meant to show the capabilities of Project Astra and how we will interact with it.

My biggest question is: Will something like Project Astra replace Google Assistant on Android devices? After all, this AI can remember where you put your stuff and pick up on nuances; at least, that’s what the demo conveys. I couldn’t get an answer from the few Google people I asked. But I have a strong feeling that the future of Android will depend less on tapping to interact with the phone and more on talking to it.